Learning Curve

Latest

Awesome Things

How Mike Morath Can Redeem Himself, Keep DISD Trustee Seat

Yesterday I called for DISD trustee Mike Morath to step down because he failed to pay $2 million for the sole copy of the Wu-Tang Clan album Once Upon a Time in Shaolin. Morath has so far not responded publicly to my challenge. Today I offer a way for Morath to demonstrate real leadership and show that he is for the children.

By Tim Rogers

Education

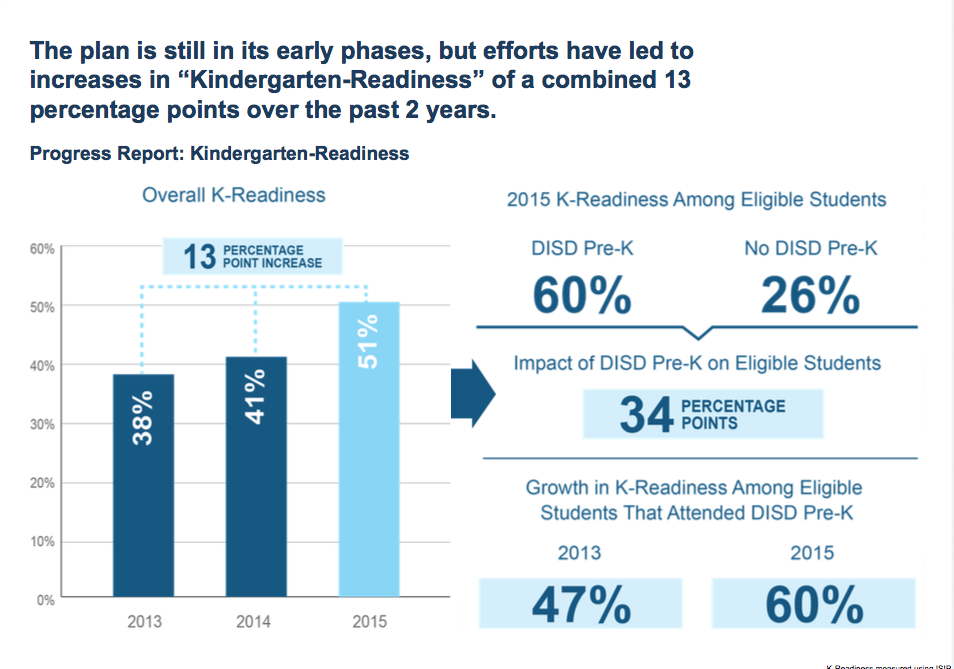

Pre-K, Money, and the Future of School Reform

Why it takes outside pressure to make a school district do the right thing

By Eric Celeste

Education

SAGA Pod: Jim Schutze on DISD, Racial Politics, and Scott Griggs

We recorded this one twice because I screwed up. Oh, well.

By Eric Celeste

Education

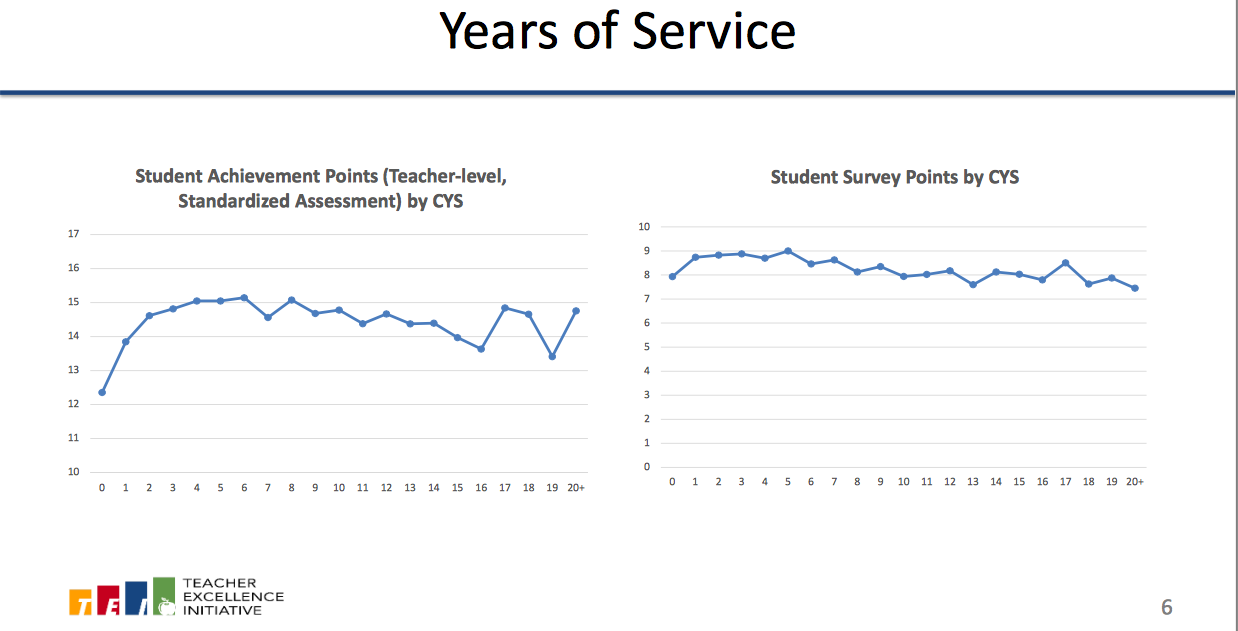

Lessons From DISD’s Merit Pay: Finding Great Teachers Takes More Than a Calendar

Experience and degrees not enough information to base pay raises on

By Eric Celeste

Education

Lessons From DISD’s New Merit Pay System: Bad Teachers Go Bye-Bye

Great teachers are finally making as much money as bad teachers

By Eric Celeste

Education

Reason No. 3 to Vote for the DISD Bond: Because Hope Is a Good Thing

Go vote, people. You only have a few hours left.

By Eric Celeste

Education

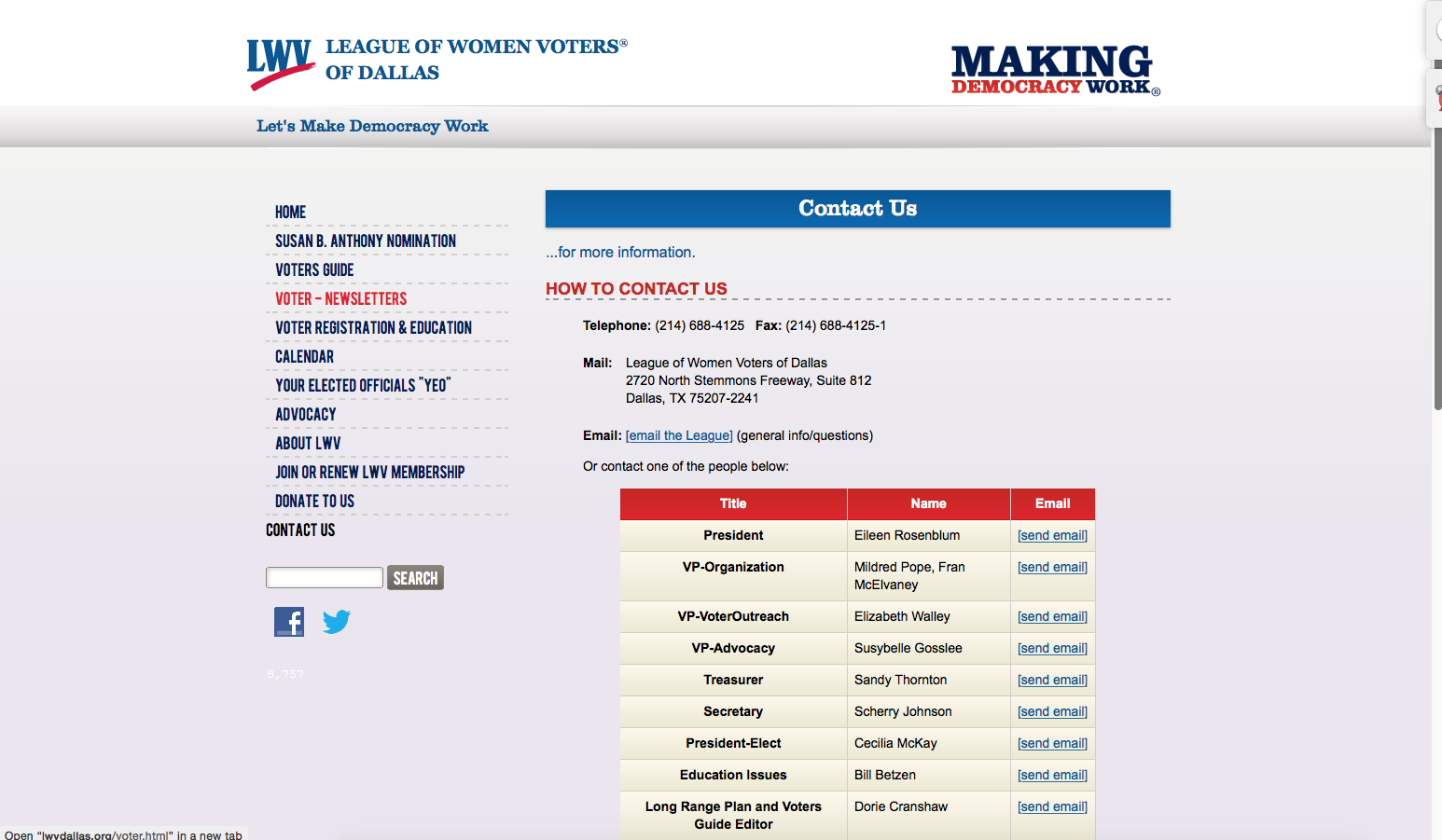

The League of Women Voters of Dallas Is Officially a Joke

I mean, Bill Betzen? Are you serious?

By Eric Celeste

Education

Reason No. 2 to Vote for the DISD Bond: It’s Fiscally Sound

It doesn't raise taxes, and DISD has been fiscally responsible for the past three years

By Eric Celeste

Education

Turn and Talks Podcast: Why a Former DISD Teacher Voted For the Bond

Kids, money, race, and the demonizing of reformers

By Eric Celeste

Education

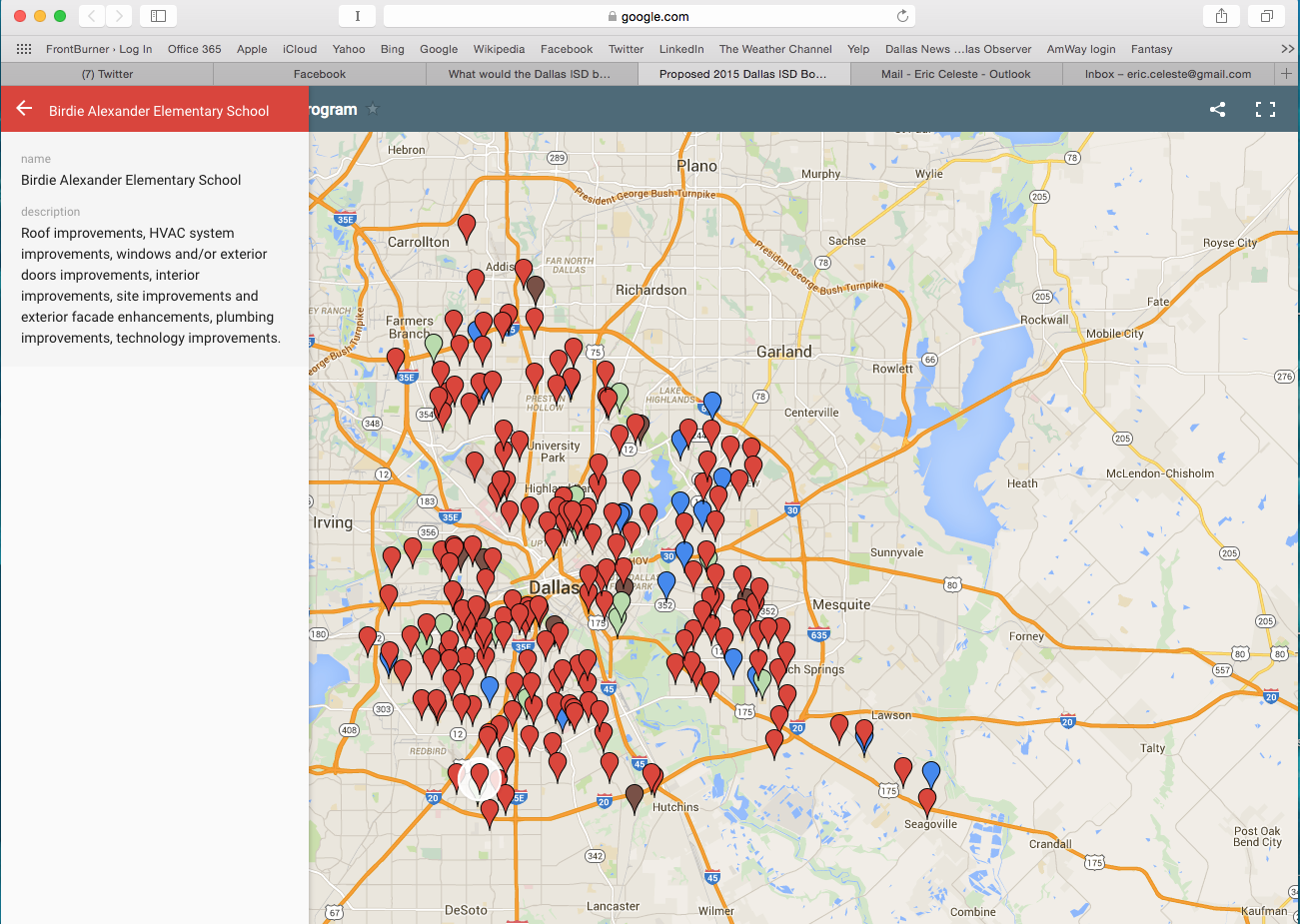

Reason No. 1 to Vote For the DISD Bond: It Helps Kids

It helps kids in every area of the city, in many different ways

By Eric Celeste